Belief comes in two kinds

Does the UAP literature firehose need a framework to navigate it?

[This article is free of any AI content]

It’s been several months since my earliest articles in June following the Grusch revelations. I’ve spent the three months since then absorbing the UFO / UAP literature so that I can discuss it on a more even footing with more seasoned folks than myself. (I curated a list of just about all the sources I reviewed, which I’ll probably share here in Notes and/or over Twitter, so folks know what basis I’m speaking from in upcoming articles.)

But any future content calls for this meta-discussion of belief systems & our collective epistemology.

Two kinds of belief

Ever since the Grusch whistleblowing, I’ve been trying to work out what it means to decide whether any individual piece of evidence in the UAP lore or UAP literature is in fact an accurate portrayal of historical reality. (In doing so, I’ve bypassed any scholarly study of epistemology, so apologies if my framework bugs anyone for ignoring what’s been done before.)

In my head, what I’m doing is assigning weights to two things:

1. How much I personally believe an item, and

2. How much I would get behind that item, without any anonymity, in front of all my friends & colleagues.

Think of it as the thickness of each segment of scaffolding in a belief system. The weights are the thickness, or strength, of the individual segments (the evidence/testimony items) that make up the scaffolding (where the scaffolding is the entire topic). I might be passionate about a certain item, so my individual perception of whether the overall topic is valid or invalid rests on my A-weights (weight 1 above). But I believe someone else in my network should only be asked to believe in the B-weights of the topic (weight 2 above): much flimsier, but a more epistemically accurate picture of the belief system’s structure.

In that way, I hold two probability values in my head simultaneously for any piece of information (evidence or testimony). When I list those probabilities here, I use a special format: A / B. A is how much I personally believe an item, and B is how much I get behind it with the imprimatur of my personal brand. In a way, B is really asking, “How much do I think anyone I know really *should* believe the item, based on the standard of evidence it meets, independent of how much I believe it?”

In practice, as a rule I think B is always less than A. If it’s an issue I’m passionate about, and I’m therefore the tip of the spear in my network for trying to understand whether there’s any validity to it, I never think people in my network should believe something of incomplete provenance as much as I believe it. This works both ways: there are unrelated issues that individuals in my network are probably passionate about, and I don’t think I should have to hold as high a view of any item related to their issue as they do. But nor should I write off what they believe altogether. So their B’s for items in that issue set the level for my A’s on those same items: my A’s should be no greater than their B’s.
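To make the A / B format concrete, here’s a minimal Python sketch of a single belief item. The names `BeliefItem`, `parse_ab` and `cap_my_a` are my own hypothetical choices, and weights are stored as fractions between 0 and 1. The check enforces B ≤ A rather than a strict B < A; that relaxation is an assumption, made so that a flat 0% / 0% rating is still allowed. `cap_my_a` encodes the last rule above: on someone else’s issue, my A is capped at their B.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BeliefItem:
    """One evidence/testimony item rated in A / B format.

    a: how much I personally believe the item (0.0 to 1.0).
    b: how much I'd publicly stand behind it (0.0 to 1.0).
    """
    name: str
    a: float
    b: float

    def __post_init__(self) -> None:
        for value in (self.a, self.b):
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{self.name}: weights must lie in [0, 1]")
        # The text says B is always less than A; this sketch relaxes that to
        # B <= A (an assumption) so that an item rated 0% / 0% is still valid.
        if self.b > self.a:
            raise ValueError(f"{self.name}: B ({self.b}) exceeds A ({self.a})")

    def __str__(self) -> str:
        # Render back into the "A / B" notation, e.g. "95% / 60%".
        return f"{self.a:.0%} / {self.b:.0%}"


def parse_ab(name: str, text: str) -> BeliefItem:
    """Parse a rating written like '95% / 60%' into a BeliefItem."""
    a_text, b_text = (part.strip().rstrip("%") for part in text.split("/"))
    return BeliefItem(name, float(a_text) / 100, float(b_text) / 100)


def cap_my_a(my_a: float, their_b: float) -> float:
    """On an issue someone else leads on, keep my A no greater than their B."""
    return min(my_a, their_b)


if __name__ == "__main__":
    item = parse_ab("some testimony", "95% / 60%")
    print(f"{item.name}: {item}")
    # My own hunch on a friend's issue gets capped at their public B.
    print(cap_my_a(0.9, 0.4))
```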

Example: data threads I followed shortly after the Grusch revelations

In UAP there is an enormous number of unsourced claims, and a tiny, tiny throughline of credibly sourced claims. Each of us has to form our own personal corpus of which parts of the UAP lore / evidence / testimonies are worth believing, and to what degree, and which are not. So, for example, besides the Navy videos (‘tictac’, ‘go-fast’), Grusch’s public testimony, the missile silo testimonies, and (some of) the Greer witness testimonies, a couple of pieces of content that I hold in rather high regard (in Belief-item format: A / B) are:

Astronaut Edgar Mitchell:

Roswell | WSMR intervention by NHI

95% / 60%

Philip Corso:

Roswell | providing NHI tech artifacts to industry

75% / 25%

Note the commonality between these two: Roswell. Suppose Roswell were debunked tomorrow. Then the A & B probabilities behind these evidence items would crater (no pun intended) to zero. However, suppose irrefutable proof of the overall general Roswell account surfaced. Then the A & B probabilities for the non-Roswell aspects of these two evidence sources (Mitchell’s & Corso’s) would increase significantly:

1) That White Sands Missile Range (WSMR) weapons tests saw intervention by UAP.

2) That the Army provided NHI artifacts to industry to reverse-engineer.
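Here’s that Roswell dependency as a tiny three-node Bayesian network, sketched in Python. Roswell is a shared parent node, and each claim’s probability comes from the law of total probability. Every number in it is a hypothetical placeholder, not one of my A / B ratings. Setting P(claim | Roswell false) to zero is also an assumption, chosen to mirror the “crater to zero” scenario.

```python
# Hypothetical placeholder numbers, NOT the article's A / B ratings.
# P(claim true | Roswell account true) and P(claim true | Roswell account false).
# Zero in the False slot mirrors "crater to zero if Roswell is debunked".
P_MITCHELL_GIVEN_ROSWELL = {True: 0.90, False: 0.0}  # WSMR intervention claim
P_CORSO_GIVEN_ROSWELL = {True: 0.50, False: 0.0}     # artifacts-to-industry claim


def marginal(p_claim_given_root: dict[bool, float], p_root: float) -> float:
    """Law of total probability: P(claim) = sum over r of P(claim | R=r) * P(R=r)."""
    if not 0.0 <= p_root <= 1.0:
        raise ValueError("p_root must lie in [0, 1]")
    return (p_claim_given_root[True] * p_root
            + p_claim_given_root[False] * (1.0 - p_root))


if __name__ == "__main__":
    # Today's uncertainty (placeholder 70%), Roswell debunked, Roswell proven.
    for label, p_roswell in [("uncertain", 0.7), ("debunked", 0.0), ("proven", 1.0)]:
        m = marginal(P_MITCHELL_GIVEN_ROSWELL, p_roswell)
        c = marginal(P_CORSO_GIVEN_ROSWELL, p_roswell)
        print(f"{label:>9}: Mitchell {m:.0%}, Corso {c:.0%}")
```

Both claims hang off the same parent node. Moving the single Roswell probability therefore moves both of them at once, which is the commonality noted above.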

To what benefit?

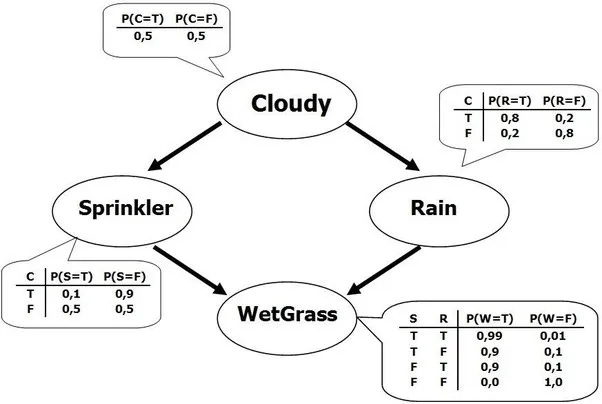

If each of us following the UAP topic (or any controversial topic where the standards of evidence in play are uncertain and not agreed upon) intuitively creates our own set of weights, distinguishing between our own individual standard of evidence and what we think the popular standard of evidence should be, then we can collectively create a very useful tool: a Bayesian network of evidence that can be popularly accessed and popularly updated, i.e. crowdsourced.
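Here’s a rough, hypothetical Python sketch of what the crowdsourced layer could look like underneath. Each evidence item is a node with links to the items it depends on, and any contributor can add or update their own A / B rating. Averaging the ratings with a plain mean is my assumption; a median or a reputation-weighted mean would be just as defensible. This sketch only stores and aggregates weights. It doesn’t run Bayesian inference over the network.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Optional


@dataclass
class EvidenceNode:
    """One evidence/testimony item in a hypothetical crowdsourced network."""
    name: str
    parents: list[str] = field(default_factory=list)  # items this one depends on
    # contributor handle (could be a pseudonym) -> (A, B), each in [0, 1]
    ratings: dict[str, tuple[float, float]] = field(default_factory=dict)

    def rate(self, contributor: str, a: float, b: float) -> None:
        """Add or overwrite one contributor's A / B rating for this item."""
        if not (0.0 <= b <= a <= 1.0):
            raise ValueError("expected 0 <= B <= A <= 1")
        self.ratings[contributor] = (a, b)

    def crowd_weight(self, mode: str = "B") -> Optional[float]:
        """Mean A or B weight across contributors, or None if nobody rated it."""
        if mode not in ("A", "B"):
            raise ValueError("mode must be 'A' or 'B'")
        if not self.ratings:
            return None
        index = 0 if mode == "A" else 1
        return mean(rating[index] for rating in self.ratings.values())


class EvidenceNetwork:
    """Items plus their dependency links (e.g. a Roswell-dependent claim -> Roswell)."""

    def __init__(self) -> None:
        self.nodes: dict[str, EvidenceNode] = {}

    def add(self, name: str, parents: tuple[str, ...] = ()) -> EvidenceNode:
        """Register an item; its parent items must already exist."""
        missing = [p for p in parents if p not in self.nodes]
        if missing:
            raise KeyError(f"unknown parent item(s): {missing}")
        node = EvidenceNode(name, list(parents))
        self.nodes[name] = node
        return node
```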

(The image links to a Towards Data Science explainer article by Devon Soni.)

But why A & B?

I think people want to see not just what the crowdsourced contributors think the public, collective belief weighting ought to be, but also what those contributors believe individually. I think people wading into the debate deserve to see these “hunch”- or “intuition”-level weights from those willing to share them. And I think contributors should be able to share their hunches or intuitions anonymously, without fear of reputational fallout, if they so wish.

On building

While there are some nascent tools for creating Bayesian networks, my limited research so far suggests that a crowdsourced Bayesian network tool like this would still need to be purpose-built. Know any good software engineers? 😁 I think a reasonable goal would be to generate interactive networks of this variety:

update 19-Sep-23: The darker areas could indicate, for example, the information clusters that carry more crowdsourced belief weight, and the lighter areas those that carry less (the mode could be switched between A & B at the user’s whim). Credit to Reddit user TallWhiteNThe7Greys for correctly identifying the above as a ‘heatmap’. /update
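The heatmap idea boils down to two steps: map each item’s weight to a shade, and let the user flip between A and B. Here’s a minimal, text-only Python sketch. The five-level ASCII shade scale and the function names are assumptions for illustration. A real interactive tool would use a proper graph-drawing library instead.

```python
# Lightest to darkest; plain ASCII so it prints in any terminal.
SHADES = " .:+#"


def shade(weight: float) -> str:
    """Map a weight in [0, 1] to a shade character: darker means more weight."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    index = min(int(weight * len(SHADES)), len(SHADES) - 1)
    return SHADES[index]


def heatmap(items: dict[str, tuple[float, float]], mode: str = "B") -> list[str]:
    """One shaded row per item, using either the A or the B weights."""
    if mode not in ("A", "B"):
        raise ValueError("mode must be 'A' or 'B'")
    index = 0 if mode == "A" else 1
    return [f"[{shade(ab[index]) * 3}] {name}" for name, ab in items.items()]


if __name__ == "__main__":
    # Stand-in data: the two A / B ratings listed earlier in this article.
    items = {"Mitchell": (0.95, 0.60), "Corso": (0.75, 0.25)}
    for mode in ("A", "B"):
        print(f"mode {mode}")
        print("\n".join(heatmap(items, mode)))
```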

What do you think? Would you like to see such a tool get created? Make yourself heard in the comments or on Twitter at @BlockedEpistem. Subscribing helps motivate me to write more articles like this. And yes, full disclosure: in the future I may paywall / paydelay some content; that’s certainly an option I’d like to keep open.

[Technical update - 30-Oct-2023]

The above poses a framework for encoding belief systems in a format that lends itself to programmatic processing. I’ve been thinking about the zero-reference used for the probability estimates, and I think the baseline should be 50% (instead of 0%) to represent indifference as to whether an assertion is true or emphatically untrue. Therefore 0% represents a belief, held with full certainty by the individual rating the assertion, that the assertion is false. This aligns with intuitive expressions like “50/50” meaning evenly uncertain about something.
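Here are two ways a 50% baseline could be encoded, as a minimal Python sketch with hypothetical function names. The first is a linear re-centering that maps 0%, 50% and 100% to -1, 0 and +1. The second is log-odds, the scale Bayesian updating is often done in. It is also 0 at 50%, but it stretches toward infinity near certainty. Neither is prescribed by the framework above; they’re just two reasonable options.

```python
import math


def centered(p: float) -> float:
    """Linear re-centering: 0% -> -1 (surely false), 50% -> 0, 100% -> +1 (surely true)."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    return 2.0 * p - 1.0


def log_odds(p: float) -> float:
    """log(p / (1 - p)): 0 at 50%, heading to -inf / +inf as p nears 0% / 100%."""
    if not 0.0 < p < 1.0:
        raise ValueError("log-odds is undefined at exactly 0% or 100%")
    return math.log(p / (1.0 - p))


if __name__ == "__main__":
    for p in (0.05, 0.25, 0.5, 0.75, 0.95):
        print(f"{p:.0%}: centered {centered(p):+.2f}, log-odds {log_odds(p):+.2f}")
```

The linear version keeps the familiar bounded range. Log-odds makes the step from 95% to 99% count for much more than the step from 50% to 54%, which some people find closer to how certainty actually feels.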